In 1998 Hans Munthe-Kaas wrote a series of papers developing Runge-Kutta methods on Lie groups (and manifolds) where he uses the exponential map to reformulate ordinary differential equations on the Lie group as ordinary differential equations on the Lie algebra. For general Lie groups where an explicit expression of the differential of the exponential map and its inverse are not readily available, Munthe-Kaas uses a series expansion in terms of iterated Lie brackets to derive Runge-Kutta type integrators that incorporate correction terms expressed in terms of the commutators. In this short note, I will not explore this simplification by assuming that we can evaluate a (generalization of the) exponential map and its differential explicitly. Such formulas are easy to come by when working with low-dimensional matrix Lie groups like \(\newcommand{\SE}{\mathrm{SE}}\SE(3)\). \(\newcommand{\Msf}{\mathsf{M}}\newcommand{\Vsf}{\mathsf{V}}\newcommand{\Esf}{\mathsf{E}}\newcommand{\Fsf}{\mathsf{F}}\newcommand{\Circles}{\mathscr{C}}\newcommand{\Spheres}{\mathscr{S}}\newcommand{\Willmore}{\mathcal{W}}\newcommand{\RP}{\mathbb{R}\mathrm{P}}\newcommand{\RR}{\mathbb{R}}\newcommand{\CC}{\mathbb{C}}\newcommand{\HH}{\mathbb{H}}\newcommand{\CP}{\CC\mathrm{P}}\newcommand{\g}{\mathfrak{g}}\newcommand{\Ad}{\operatorname{Ad}}\newcommand{\SE}{\mathsf{SE}}\newcommand{\se}{\mathsf{se}}\newcommand{\ad}{\operatorname{ad}}\newcommand{\SO}{\mathsf{SO}}\newcommand{\cay}{\operatorname{cay}}\)

To fix some notation let \(G\) be a Lie group with Lie algebra \(\newcommand{\g}{\mathfrak{g}}\g\). We will be interested in reformulating an ordinary differential equation of the form

\[

\begin{cases}

\frac{d}{dt}g(t) = f(t,g(t)),\\

g(t_0) = g_0,

\end{cases}

\]

on \(G\) into an ordinary differential equation on \(\g\); here, \(t\in[t_0,t_1],\) \(g(t)\in G,\) and \(f(t,g(t))\in T_{g(t)}G.\)

Lie Group Preliminaries

To keep this note self-contained, we need to quickly review a couple of basic concepts related to Lie groups. Every element \(g\in G\) induces left- and right-translation map \(L_g:G\to G\) and \(R_g:G\to G\) defined as \[ L_g(h) = g\cdot h, \qquad R_g(h) = g\cdot h,\] respectively. Their differentials are denoted by \(dL_g,dR_g:TG\to TG\). We can use these maps to left-trivialize (or right-trivialize) the tangent bundle, identifying \(TG\) with \(G\times\g\) via the map \((g,\sigma)\in G\times\g\mapsto dL_g(\sigma)\) (or via \((g,\sigma)\mapsto dR_g(\sigma)\)). For matrix Lie groups, these multiplication maps along with their differentials will just be described by matrix multiplication. However, it is sometimes more convenient to use alternative parameterizations of certain Lie groups (for example, using quaternions to describe rotations); in such cases, it is important to distinguish between the translation and its differential (since they act on different spaces; for example, \(L_g:S^3\to S^3\) is given by quaternion multiplication, but \(dL_g : \RR^3\to T_gS^3, \) where we use some bases of \(T_1S^3\) of purely imaginary quaternions to identify \(T_1S^3\cong\RR^3\)). There is another important map \(\Ad_g:G\to G\) that is defined by conjugation \(\Ad_g = L_g\circ R_{g^-1}. \) By a slight abuse of notation, we also denote the induced action on the Lie algebra also by \(\Ad_g:\g\to\g\); by the chain rule \[ \Ad_g(\sigma) = dL_g\circ dR_{g^{-1}}(\sigma) \] for \(\sigma\in \g.\)

To start our analysis, let us left-trivialize \(TG\) to identify it with \(G\times\g\) so that we can write the evoluation as

\[

\begin{cases}

\frac{d}{dt}g(t) = g(t)Y(t,g(t)), \\

g(t_0) = g_0,

\end{cases}

\]

where now \(Y(t,g(t)) = dL_{g(t)^{-1}}f(t,g(t))\in\g\). When we write things like \(gY\) for \(g\in G\) and \(Y\in\g\) we really mean \(dL_{g}(Y) \in T_gG\).

To describe the evolution on \(\g\) we introduce a map \(\tau:\g\to G\) so that we may parameterize \(G\) by \(\g\). There are a couple of properties that \(\tau\) needs to satisfy. Let \(e\in G\) be the identity element of the group. Assume that \(\tau(0)=e\) and that \(\tau\) is a local diffeomorphism around \(0\). Furthermore, assume that \(\tau\) is analytic in a neighborhood of the origin, and that \(\tau(\sigma)\tau(-\sigma) = e\). These properties ensure that \(\tau\) defines an analytic chart (and by right-trivialization, an atlas) on \(G\). The most important tool that we will use is the right-trivialized differential of \(\tau\).

Definition 1. The right-trivialized differential of \(\tau\) is the function \(d\tau:\g\times\g\to\g\) which satisfies \[ D\tau(\sigma)\cdot \delta = dR_{\tau(\sigma)}d\tau_{\sigma}(\delta) \] for all \(\delta\in\g\).

We write \(d\tau_{\sigma}(\delta)\) to denote \(d\tau(\sigma,\delta)\) and note that \(d\tau\) is linear in its second argument. The property that will allow us to simplify the description of the evolution is that \[ d\tau_{\sigma}(\delta) = \Ad_{\tau(\sigma)}d\tau_{-\sigma}(\delta). \]

To show this, we differentiate \(\tau(-\sigma) = \tau(\sigma)^{-1}\) to obtain \[ -dR_{\tau(-\sigma)}d\tau_{-\sigma}(\delta) = -dL_{\tau(-\sigma)}dR_{\tau(-\sigma)}(D\tau(\sigma)\cdot\delta) = -dL_{\tau(-\sigma)}d\tau_{\sigma}(\delta). \]

Inverting \(dL_g\), yields the desired result

\[

dR_{\tau(-\sigma)}d\tau_{-\sigma}(\delta) = dL_{\tau(-\sigma)}d\tau_{\sigma}(\delta) \iff d\tau_{\sigma} = \Ad_{\tau(\sigma)}d\tau_{-\sigma}.

\tag{1}

\]

The last piece of machinery that we will need is the inverse of the right-trivialized differential.

Definition 2. The inverse of the right-trivialized differential is the function \(d\tau^{-1}:\g\times\g\to\g\) given by \(d\tau^{-1}_{\sigma} = (d\tau_{\sigma})^{-1}\in\operatorname{End}(\g)\).

The map \(d\tau^{-1}\) intuitively describes the differential of \(\tau^{-1}\). The ordinary differential equation on the Lie algebra is considerably simplified using \(d\tau^{-1}\), and since it is only formulated on the Lie algebra we can also often give explicit matrix expressions with respect to a basis of \(\g\).

The special Euclidean group of \(\RR^3\) is denoted \(\SE(3)\) and it can be written as a semidirect product \(\SE(3)=\SO(3)\ltimes\RR^3\); every Euclidean motion \(g\in\SE(3)\) is of the form \(g(x) = Ax + b\) where \(A\in\SO(3)\) is the rotational component and \(b\in\RR^3\) is the translational component. \(\SE(3)\) plays an important role in formulating rigid body dynamics, and so it is worthwhile to take some time to look at examples of local diffeomorphisms relevant in applications.

The Lie algebra \(\se(3)\) of infinitesimal Euclidean motions can be identified with \(\RR^3\times\RR^3\) where \(Y=(\omega,v)\in\se(3)\) describes the infinitesimal rotation \(\omega\times\) and the infinitesimal translation by \(v\). The exponential map is then the map \(\exp:\se(3)\to\SE(3)\) given by integrating the infinitesimal Euclidean motion for unit time. An explicit expression for the exponential map on \(\SE(3)\) is given in Review of the exponential and Cayley map on SE(3) as relevant for Lie group integration of the generalized Poisson equation and flexible multibody systems [1, Eqn. 2.25]. Let \(Y=(\omega,v)\in\se(3)\); then

\[ \exp(Y) = (\exp^{\SO(3)}(\omega), d\exp^{\SO(3)}_\omega(v)). \]

In Lie Group Integrators for Animation and Control of Vehicles [2, Eqn. 11]. the explicit formula \[ \exp^{\SO(3)}(\omega) = \begin{cases}\operatorname{id}_{\RR^3} & \text{if }\omega = 0, \\ \operatorname{id}_{\RR^3} + \tfrac{\sin\|\omega\|}{\|\omega\|}\omega\times + \frac{1-\cos\|\omega\|}{\|\omega\|^2}(\omega\times)^2 & \text{if }\omega\neq 0, \end{cases} \]

known as Rodrigues formula, is given. Similarly, the differential of the exponential map is given in [2, Eqn. 13]:

\[

d\exp^{\SO(3)}_{\omega}(v) = \begin{cases}

\operatorname{id}_{\RR^3} & \text{if }\omega=0, \\

\operatorname{id}_{\RR^3} + \frac{1-\cos\|\omega\|}{\|\omega\|^2}(\omega\times) + \frac{\|\omega\|-\sin\|\omega\|}{\|\omega\|^3}(\omega\times)^2 & \text{if }\omega\neq 0,

\end{cases}

\]

from which we can compute the exponential map on \(\SE(3)\). The last thing that we need is the inverse of the right-trivialized differential of the exponential map on \(\SE(3)\). In [2, Eqn. 14] the following explicit expression is given

\[

d\exp^{-1}_{Y} = \operatorname{id}_{\RR^3\times\RR^3} – \frac12\ad_{Y} + \frac{1}{12}\ad_Y^2,\qquad \ad_Y = \begin{pmatrix}\omega\times & 0 \\ v\times & \omega\times\end{pmatrix}.

\]

The Cayley map for \(\SE(3)\) is a well-known approximation of the exponential map and can be explicitly written as \begin{equation*}

\begin{aligned}

\cay(Y) = \Big( & \operatorname{id}_{\RR^3} + \tfrac{4}{4 +

|\omega|^2}((\omega\times) + \tfrac12(\omega\times)^2),

\tfrac{2}{4 + |\omega|^2}(2 v + \omega\times v) \Big)

\end{aligned}

\end{equation*}

for \(Y=(\omega,v)\in\se(3)\) [1, Eqn. 3.14] The first entry describes the rotational component of the resulting Euclidean motion and the second entry the translational component.

The inverse of the right-trivialized differential \(d\cay^{-1}\colon \se(3)\times\se(3)\to \se(3)\) [1, Eqn. 3.17]. It is linear in its second argument, and so under the identification of \(\se(3)\) with \(\RR^6\) it is identified with a \((6\times 6)\)-matrix. Explicitly, it is given by

\begin{align}

d\cay^{-1}_{Y} =

\begin{pmatrix}

\operatorname{id}_{\RR^3} – \tfrac12(\omega\times) + \tfrac{1}{4}\omega\otimes\omega & 0 \\

-\tfrac12((v\times) – \tfrac12 (\omega\times)(v\times) ) & \operatorname{id}_{\RR^3} – \tfrac12(\omega\times)

\end{pmatrix}.

\label{eq:dtau_inv}

\end{align}

Runge-Kutta-Munthe-Kaas methods are now obtained by using \(\tau\) to parameterize the evolution of the group variables by the Lie algebra and applying a standard Runge-Kutta method on the resulting equations on the Lie algebra. Parameterize \(g(t) = g_0 \tau(\sigma(t))\) by \(\sigma:[t_0,t_1]\to \g\); computing its derivative \[ \frac{d}{dt}g(t) = dL_{g_0} dR_{\tau(\sigma(t))}d\tau_{\sigma(t)}\dot{\sigma}(t) \] using the definition of the right-trivialized differential yields the equivalence

\begin{align*}

\tfrac{d}{dt}g(t) = g(t)Y(t,g(t)) & \iff dL_{g_0} dR_{\tau(\sigma(t))}d\tau_{\sigma(t)}\dot{\sigma}(t) = dL_{g_0} dL_{\tau(\sigma(t))} Y(t,g(t)) \\ & \iff \Ad_{\tau(-\sigma(t))}d\tau_{\sigma(t)}\dot{\sigma}(t) = d\tau_{-\sigma(t)}\dot{\sigma}(t) = Y(t,g(t)),

\end{align*}

where in the last step we used the relationship \(\Ad_{\tau(-\sigma)}d\tau_{\sigma} = d\tau_{-\sigma}\) from Equation 1. Using the inverse right-trivialized differential we can rewrite the ordinary differential equation as

\[

\begin{cases}

\tfrac{d}{dt}\sigma = d\tau^{-1}_{-\sigma}(Y), \\

\sigma(0) = 0.

\end{cases}\tag{\(*\)}

\]

Since we have reformulated the group evolution on the Lie algebra, there is no reason we need to stop at Runge-Kutta methods; any numerical integration scheme applicable (so the vector field needs to satisfy certain regularity requirements) on vector spaces can be used to integrate the evolution on a Lie group. In the remainder of this note, we will only consider Runge-Kutta methods.

Notice that the evolutions on \(G\) and \(\g\) are equivalent, irrespective of the choice of \(\tau\), and that the convergence of a Runge-Kutta method on the Lie group immediately follows from the convergence on vector spaces. The incorporation of the differential of \(\tau\) results in an evolution that is distinct from the one that arises by naïvely using \(\tau\) to ensure that the velocities stay in the group.

An \(s\)-stage Runge-Kutta method is specified by a Butcher tableau, which is specified by a matrix of coefficients \(a\in\RR^{s\times s}\) and a vector \(b\in\RR^{s}\); defining \(c\in\RR^{s}\) by \(c_i = \sum_{j=1}^{s}a_{ij}\) the Butcher tableau is

\[

\renewcommand\arraystretch{1.2}

\begin{array}

{c|cccc}

c_1 & a_{11} & \cdots & a_{1s} \\

\vdots & \vdots & \ddots & \vdots \\

c_s & a_{s1} & \cdots & a_{ss} \\

\hline

& b_1 & \cdots & b_s

\end{array}

\]

Now consider the first ordinary differential equation \(\dot{\sigma}(t) = \varphi(t,\sigma(t))\) on a vector space \(\sigma(t)\in \g\). Given a Butcher tableau as above, the corresponding Runge-Kutta approximation (with step size \(h>0\)) is given by

\[

\begin{align}

k_{n}^i & = \varphi\Big(t_n + c_i h, \sigma_n + h \sum_{j=1}^{s}a_{ij}k_n^j\Big), \\

\sigma_{n+1} & = \sigma_n + h\sum_{j=1}^{s}b_j k_{n}^j.

\end{align}

\]

If \(a_{ij} = 0\) for all \(j\geq i\) then the method is called explicit; otherwise it is called implicit. The order of the trunctation error is not directly related to the number of stages; in fact, it is still an open problem to determine the minimum number of stages needed to reach a desired order of convergence.

Applying this to the evolution \((*)\), we have that \(\sigma_n=0\) and so \[ \sigma_{n+1} = h\sum_{j=1}^{s}b_j k_{n}^j, \] which when we go back to the group variables means that

\[

g_{n+1} = g_n\tau\Big(h\sum_{j=1}^{s}b_j k_{n}^j\Big).

\]

The intermediate stages involve computing for \(i=1,\dots,s\)

\[

k_{n}^i = d\tau^{-1}_{-h \sum_{j=1}^{s}a_{ij}k_n^j}Y\Big(t_n + c_i h, g_n\tau\big(h \sum_{j=1}^{s}a_{ij}k_n^j\big)\Big). \\

\]

Definition 3. The \(s\)-stage Runge-Kutta-Munthe-Haas method associated to the Butcher tableau above is given by

\begin{align}

k_n^i & = d\tau^{-1}_{-h \sum_{j=1}^{s}a_{ij}k_n^j}Y\Big(t_n + c_i h, g_n\tau\big(h \sum_{j=1}^{s}a_{ij}k_n^j\big)\Big), \quad i=1,\dots,s, \\

g_{n+1} & = g_n\tau\Big(h\sum_{j=1}^{s}b_j k_{n}^j\Big).

\end{align}

Going with the Flow

Let us take a look at a specific example and describe the specific computations that need to be done to use RK4 to integrate the equations of motion of a rigid body.

Rigid body dynamics are one of the first examples that one encounters of an ordinary differential equation on a Lie group. Consider a rigid body described by an immersion \(\gamma:B\to\RR^3\) of a compact 3-manifold \(B\) with boundary, endowed with a finite non-negative Radon measure \(\rho\) describing its mass density. The motion of the rigid body can be described by a time-dependent Euclidean motion \(t\mapsto g_t\in\SE(3)\). The kinetic energy of the body at time \(t\) is \[ E_t = \int_{B}\tfrac12|(g_t\circ \gamma)’|^2~d\rho = \int_{B}\tfrac12|\omega\times \gamma + v|^2~d\rho,\] where \(g^{-1}g’ = Y = (\omega,v)\in\se(3)\) is the left-trivialized time derivative of \(g\). For each time \(t\) the kinetic energy is quadratic form in \(Y\): \[ \ell(t,Y) = \tfrac12\langle IY\mid Y\rangle,\] for some \(I:\se(3)\to\se(3)^*\).

A slight shift of perspective also allows us to formulate the equations of motion for a shape-changing body similarly. A shape changing body is instead described by a time-dependent family of immersions \(t\mapsto \gamma_t\) of \(B\) and a time-dependent family of finite non-negative Radon measures \(t\mapsto \rho_t\) on \(B\). Since the shape of the body is already given, the degrees of freedom are the same as those for a rigid body: a time-dependent family of Euclidean motions \( t\mapsto g_t\in\SE(3). \) The kinetic energy of the body at time \(t\) is \[ E_t = \int_{B}\tfrac12|\omega\times\gamma_t + v + \gamma_t’|^2~d\rho_t, \] which now has a time-dependent inertia tensor \(I_t:\se(3)\to\se(3)^*\) and additional linear and constant terms

\[

\ell(t,Y) = \tfrac12\langle I_t Y\mid Y\rangle + \langle \mu^0_t\mid Y\rangle + E^0_t.

\]

The total energy over a time interval \([t_0,t_1]\) is \[ \mathcal{L}(g) = \int_{t_0}^{t_1}\ell(t,Y)~dt. \]

An appeal to Hamilton’s principle of least action implies that the equations of motion are the Euler-Lagrange equations of the total kinetic energy of the system. When formulated using the left-trivialized Lagrangian \(\ell\) these equations are known as Euler-Poisson equations on \(G\): \[ \frac{d}{dt}g = gY\,\qquad \frac{d}{dt}\mu = \ad^*_{Y}\mu,\qquad \mu = I_tY + \mu^0_t. \] See our recent paper Going with the Flow for a more detailed discussion of how the kinetic energy of the fluid can also be approximated and incorporated into the dynamics through a modification of the \(\se(3)\)-inertia tensor and for a derivation of the equations of motion through a variational principle. The first equation is known as the reconstruction equation since it reconstructs the group variables from velocities, and the second equation describes the conservation of momentum (written in the body fixed frame). We can now take either the exponential map or Cayley map for \(\tau\) and reformulate the reconstruction equation at the level of the Lie algebra (eliminating \(Y\) from the equation using \(IY+\mu^0=\mu\)):

\[ \frac{d}{dt}\sigma = d\tau^{-1}_{-\sigma}(I^{-1}(\mu – \mu^0))\,\qquad \frac{d}{dt}\mu = \ad^*_{I^{-1}(\mu-\mu^0)}\mu. \]

In Going with the Flow we used a variational integrator to integrate these equations since they exhibit increased stability at low resolutions with large time steps. That being said, comparable performance can often be achieved by using a simpler explicit method (RK4).

The Butcher tableau for RK4 is

\[

\renewcommand\arraystretch{1.2}

\begin{array}

{c|cccc}

0 & \\

\tfrac12 & \tfrac12 \\

\tfrac12 & 0 & \tfrac12 \\

1 & 0 & 0 & 1 \\

\hline

& \tfrac16 & \tfrac13 & \tfrac13 & \tfrac16

\end{array}.

\]

The resulting Runge-Kutta method for integrating the reconstruction equation alone is

\[

\begin{align}

k_n^1 & = Y(t_n, g_n) \\

k_n^2 & = d\tau^{-1}_{-\tfrac{h}2k_n^1}Y\big(t_n + \tfrac12h, g_n\tau\big( \tfrac{h}{2}k_n^1 \big)\big) \\

k_n^3 & = d\tau^{-1}_{-\tfrac{h}2k_n^2}Y\big(t_n + \tfrac12h, g_n\tau\big( \tfrac{h}{2}k_n^2 \big)\big) \\

k_n^4 & = d\tau^{-1}_{-hk_n^3}Y\big(t_n + h, g_n\tau(hk_n^3)\big) \\

\\

g_{n+1} & = g_n\tau\big(h\big( \tfrac16k_n^1 + \tfrac13k_n^2 + \tfrac13k_n^3 + \tfrac16k_n^4 \big)\big).

\end{align}

\]

The actual implementation is only slightly more involved since \(Y\) also depends on \(\mu\), and so we need to couple the reconstruction equation to the evolution of \(\mu\) as follows:

\[

\begin{align}

Y_n^1 & = Y(t_n, g_n, \mu_n) \\

k_{n,g}^1 & = Y_n^1 \\

k_{n,\mu}^1 &= \ad^*_{Y_n^1}\mu_n \\\\\\

Y_n^2 & = Y\big(t_n + \tfrac12h, g_n\tau\big( \tfrac{h}{2}k_{n,g}^1 \big),\mu_n + \tfrac12h k_{n,\mu}^1\big) \\

k_{n,g}^2 & = d\tau^{-1}_{-\tfrac{h}2k_{n,g}^1}Y_n^2 \\

k_{n,\mu}^2 & = \ad^*_{Y_n^2}(\mu_n + \tfrac12h k_{n,\mu}^1) \\\\\\

Y_n^3 & = Y\big(t_n + \tfrac12h, g_n\tau\big( \tfrac{h}{2}k_{n,g}^2 \big), \mu_n + \tfrac12h k_{n,\mu}^2\big) \\

k_n^3 & = d\tau^{-1}_{-\tfrac{h}2k_{n,g}^2}Y_n^3 \\

k_{n,\mu}^3 & = \ad^*_{Y_n^3}(\mu_n + \tfrac12h k_{n,\mu}^2) \\\\\\\

Y_n^4 & = Y\big(t_n + h, g_n\tau(hk_{n,g}^3),\mu_n + h k_{n,\mu}^3\big) \\

k_n^4 & = d\tau^{-1}_{-hk_n^3}Y_n^4 \\

k_{n,\mu}^4 & = \ad^*_{Y_n^4}(\mu_n + h k_{n,\mu}^3) \\\\\\\

g_{n+1} & = g_n\tau\big(h\big( \tfrac16k_{n,g}^1 + \tfrac13k_{n,g}^2 + \tfrac13k_{n,g}^3 + \tfrac16k_{n,g}^4 \big)\big) \\

\mu_{n+1} & = \mu_n + h\big(\tfrac16k_{n,\mu}^1 + \tfrac13k_{n,\mu}^2 + \tfrac13k_{n,\mu}^3 + \tfrac16k_{n,\mu}^4\big).

\end{align}

\]

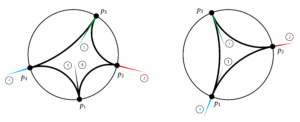

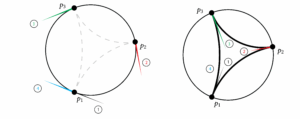

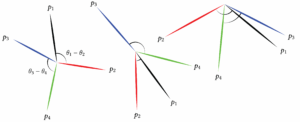

This method is a bit simpler than the variational integrator since it is an explicit update. Below is the resulting discrete evolution of underwater rigid bodies (cf. Underwater Rigid Body Dynamics).

The same equations can be used to compute the motion of an eel from its shape change: